Nvidia CEO Jensen Huang recently said that the company's next-generation AI graphics processor, Blackwell, will cost between $30,000 and $40,000 per unit. Huang attributed the price to the new technology needed to build the chip, noting that Nvidia spent roughly $10 billion on research and development to bring Blackwell to market. The chip is expected to be in high demand for training and deploying AI software such as ChatGPT.

That price puts Blackwell in roughly the same range as its predecessor, the H100 from the "Hopper" generation, which is estimated to cost between $25,000 and $40,000 per chip. Hopper's pricing was itself a significant increase over the generation before it. Nvidia typically releases a new generation of AI chips about every two years, with each generation faster and more energy efficient than the last.

Nvidia's AI chips, particularly the H100, have driven much of the company's sales growth over the past few years. The AI boom set off by products such as OpenAI's ChatGPT has created a surge in demand for powerful AI hardware, and major AI companies such as Meta have invested heavily in H100 GPUs to train their models. That demand has boosted Nvidia's revenue and cemented its position as a leading supplier of AI hardware.

Nvidia offers its AI chips in a range of configurations to suit different customer needs. The final price an end customer such as Meta or Microsoft pays depends on factors including the number of chips purchased and the configuration required. Customers can also buy Nvidia's chips directly as part of a complete system or through third-party vendors that build AI servers, some of which hold multiple AI GPUs in a single machine.

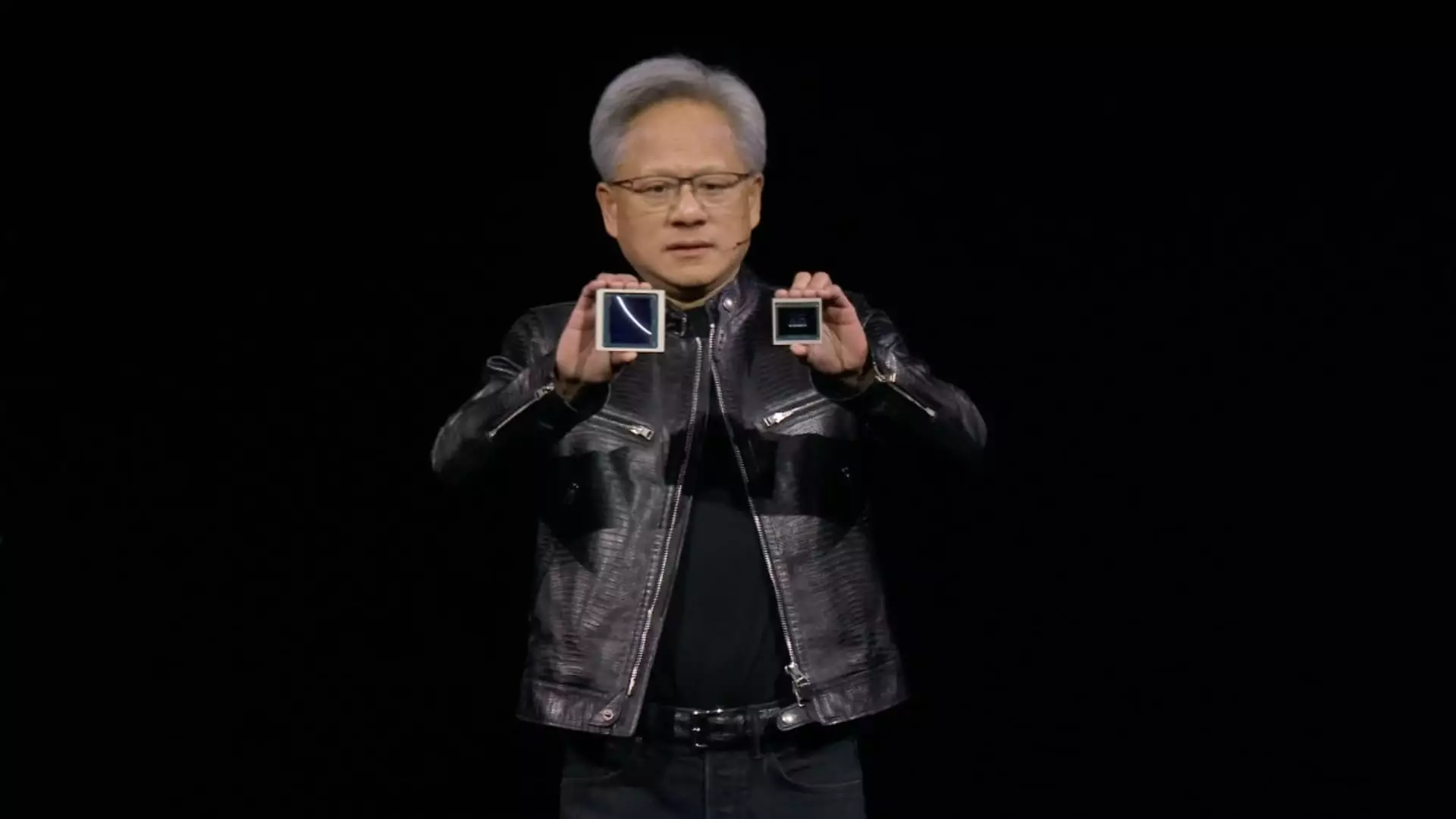

Nvidia has announced that the Blackwell AI accelerator will come in three versions: B100, B200, and GB200. The GB200 pairs two Blackwell GPUs with an Arm-based CPU, and the versions differ slightly in their memory configurations to meet different performance needs. With Blackwell, Nvidia continues its cadence of pushing AI hardware performance forward with each new generation.